I am facing this problem across different scenarios: sometimes (maybe 2% of all executions), a scenario is “stuck” in processing for a long time, often around 15 minutes. This happens with scenarios that normally take only about 10 sec.

The scenario eventually completes without errors, and the processing time is then shown as ~10 sec, so the issue cannot be seen in the execution log.

However, the issue is apparent as follows:

- I can see live that the scenario remains in processing for a long time (looking at the details, all steps are stuck in “processing”).

- The outbound trigger at the end of the scenario fires with a ~15 min delay.

Surprisingly, the issue also does not show up on the status page: https://status.activepieces.com/. For example, it occurred today, but according to the status page everything looks fine (which is incorrect).

Is anyone else seeing this problem, and how can it be fixed? Activepieces cannot be used for production if its behaviour is inconsistent and issues are not made transparent.

I never had similar issues on make.com with similar scenarios.

Currently, I am AGAIN experiencing a scenario that has been running for 30 minutes without completion or response. This prolonged delay is quite concerning, especially given the critical nature of the tasks dependent on this workflow.

I am surprised by the lack of response or communication regarding this serious issue. The inability to rely on timely execution, as workflows are consistently idling for 15-30 minutes, severely impacts our operations.

Could someone please prioritize investigating this matter and provide a status update?

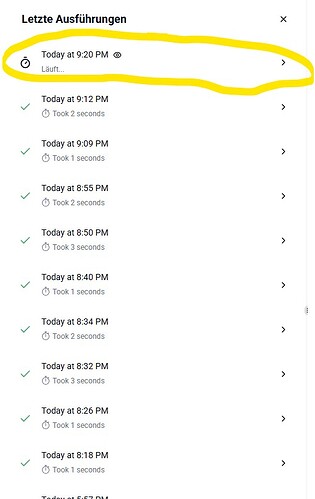

I have attached a screenshot, taken at 9:50 today, which shows that a process that normally takes only a few seconds has been idling for 30 minutes.

Hi @Friend_of_automation,

That’s true. It has happened twice—once when we had 160k concurrent flow executions. Now, we have around 8,000. There are measures in place to handle such scale, but it was much larger than we anticipated. It will be executed soon.

The issue with the current 8,000 flows is that most of them are heavy (each takes a couple of minutes to complete).

It should have been executed by now.

Thank you,

Mo

Hello Mo, @abuaboud

Thank you for your response and for providing context.

To better prepare for the future, I would need some additional clarification:

- Will measures be implemented to handle such scale more effectively in the future?

- Is there an anticipated timeline for these optimizations to be fully in place to prevent similar issues?

- Or are you saying that such long delays are to be expected, also in the future?

And yes, the scenario was eventually executed after more than 30 minutes. It shows a runtime of just 3 sec, which is obviously not correct and should not be represented like this, IMHO.