Hi Momo,

Yes, that is correct, and you can start the Actor with an HTTP request. Let me try to create a static example with a custom Actor; they all work the same way.

Receiving Data via a webhook and how to get the datasets

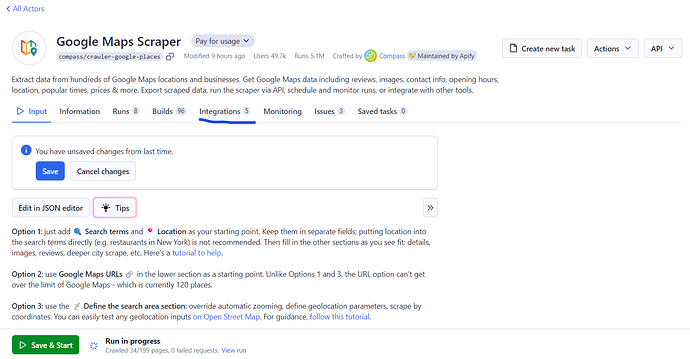

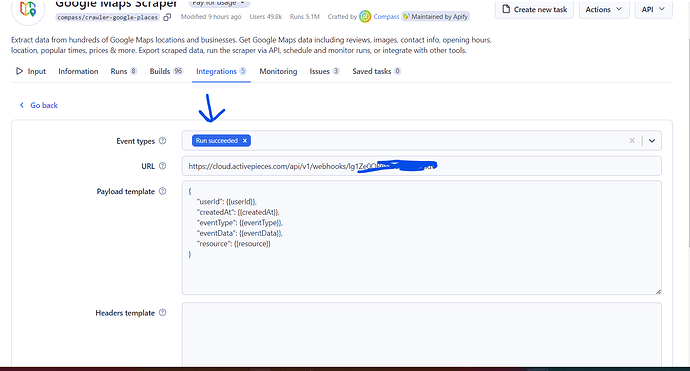

Go to your Actor and open Integrations:

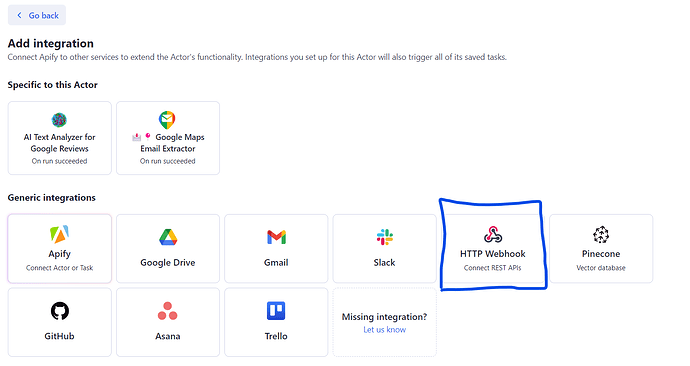

Hit this blue button

Select HTTPS Webhook

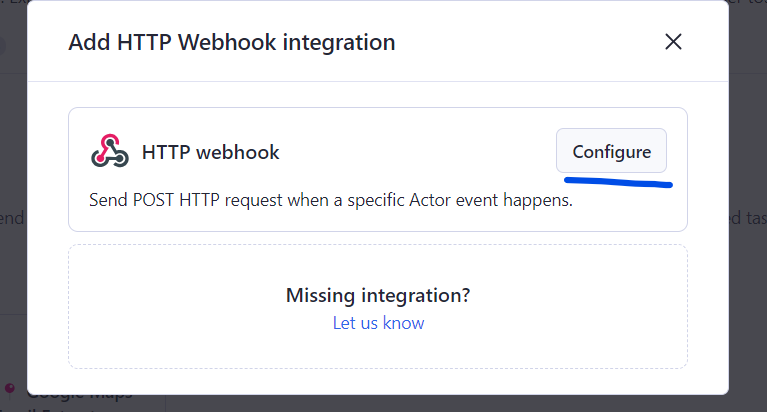

Press “Configure”

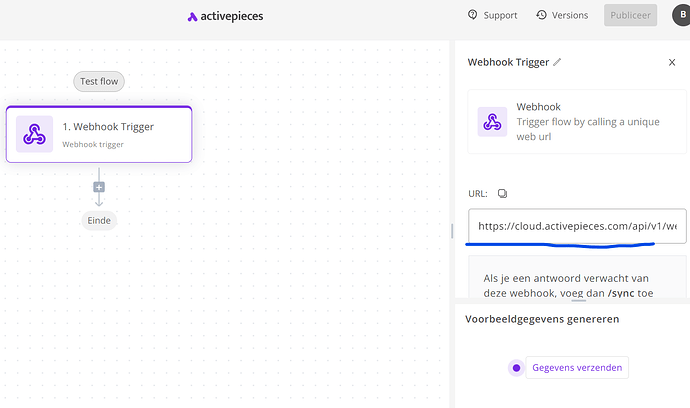

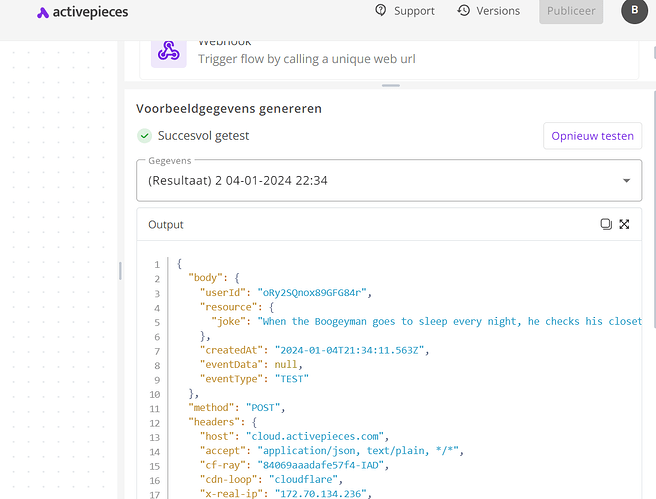

Now create a new flow in Activepieces that starts with a webhook trigger, and copy the webhook URL.

Set the event type to “Run succeeded” and paste the webhook URL into the URL field.

Now go back to Activepieces, open your webhook step, and press “Send data” (in my Dutch interface it is “Gegevens verzenden”).

Head back to Apify, scroll down in your Actor, and press “Save and test”. Your webhook should receive something like this:

Next, add a new HTTP step to your flow and set its method to GET.

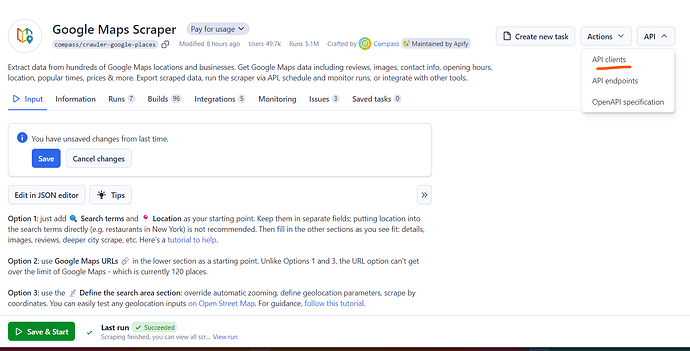
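In case it helps to see what that GET step has to call, here is a minimal Python sketch. It assumes the webhook uses Apify's default payload, where the finished run sits under `resource` and carries a `defaultDatasetId`, and that the items are read from the `/v2/datasets/{datasetId}/items` endpoint. The function name, the sample payload, and the `YOUR_API_TOKEN` placeholder are made up for illustration; check the payload your own webhook received before relying on the field path.

```python
from urllib.parse import urlencode

APIFY_API_BASE = "https://api.apify.com/v2"


def dataset_items_url(webhook_body: dict, token: str, fmt: str = "json") -> str:
    """Build the GET URL for the items of the dataset a finished run wrote to."""
    # Assumption: default Apify webhook payload, with the run object under
    # "resource" and its dataset ID in "defaultDatasetId".
    dataset_id = webhook_body["resource"]["defaultDatasetId"]
    query = urlencode({"token": token, "format": fmt})
    return f"{APIFY_API_BASE}/datasets/{dataset_id}/items?{query}"


# Trimmed, made-up payload for illustration only; a real one has more fields.
sample_body = {
    "eventType": "ACTOR.RUN.SUCCEEDED",
    "resource": {"defaultDatasetId": "EXAMPLE_DATASET_ID"},
}
print(dataset_items_url(sample_body, token="YOUR_API_TOKEN"))
```

In the Activepieces HTTP step you would build the same URL by inserting the dataset ID from the webhook step's output instead of calling a function.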

Triggering your Actor via Activepieces

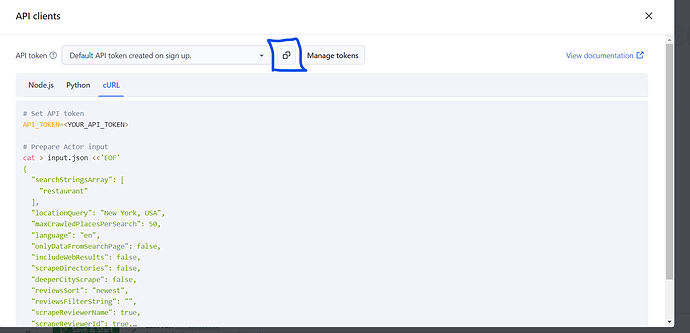

Select: API → API Clients

Then click on cURL and SCROLL DOWN!

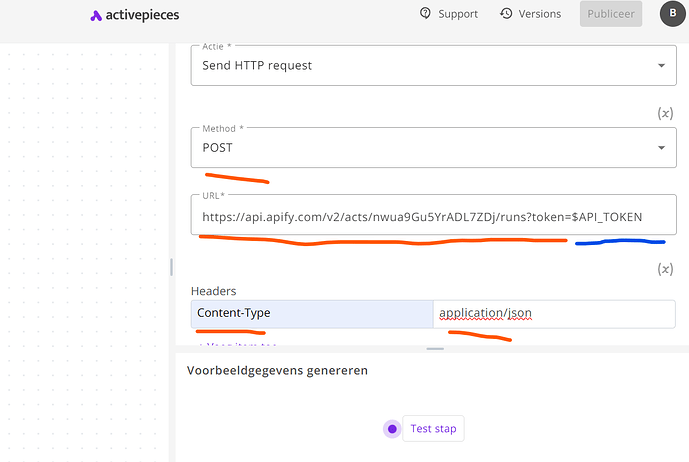

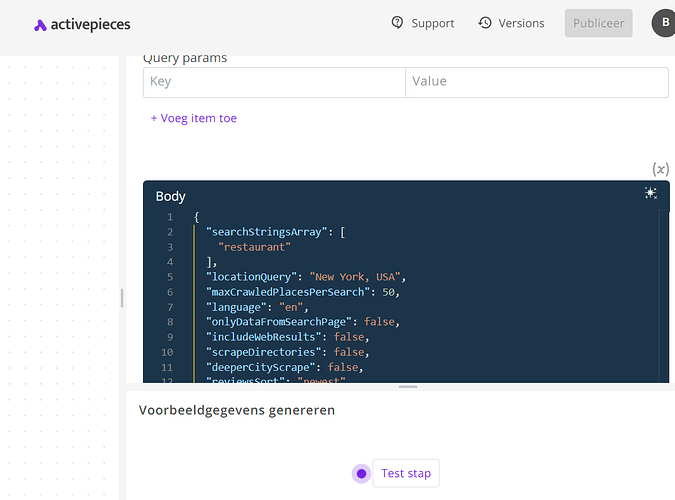

Take the URL, the POST method, and the -H (header) value from the cURL example and enter them in Activepieces.

In this case, the set-up should look like this in Activepieces:

Please remove “$API_TOKEN” from the URL and replace it with your own token. You can find it when you SCROLL UP in the Apify API client:

Then you need to copy the body from your API client; in this case, that is the green text starting with { and ending with }.

    {
        "searchStringsArray": [
            "restaurant"
        ],
        "locationQuery": "New York, USA",
        "maxCrawledPlacesPerSearch": 50,
        "language": "en",
        "onlyDataFromSearchPage": false,
        "includeWebResults": false,
        "scrapeDirectories": false,
        "deeperCityScrape": false,
        "reviewsSort": "newest",
        "reviewsFilterString": "",
        "scrapeReviewerName": true,
        "scrapeReviewerId": true,
        "scrapeReviewerUrl": true,
        "scrapeReviewId": true,
        "scrapeReviewUrl": true,
        "scrapeResponseFromOwnerText": true,
        "searchMatching": "all",
        "placeMinimumStars": "",
        "skipClosedPlaces": false,
        "allPlacesNoSearchAction": ""
    }

It should look like this in Activepieces:

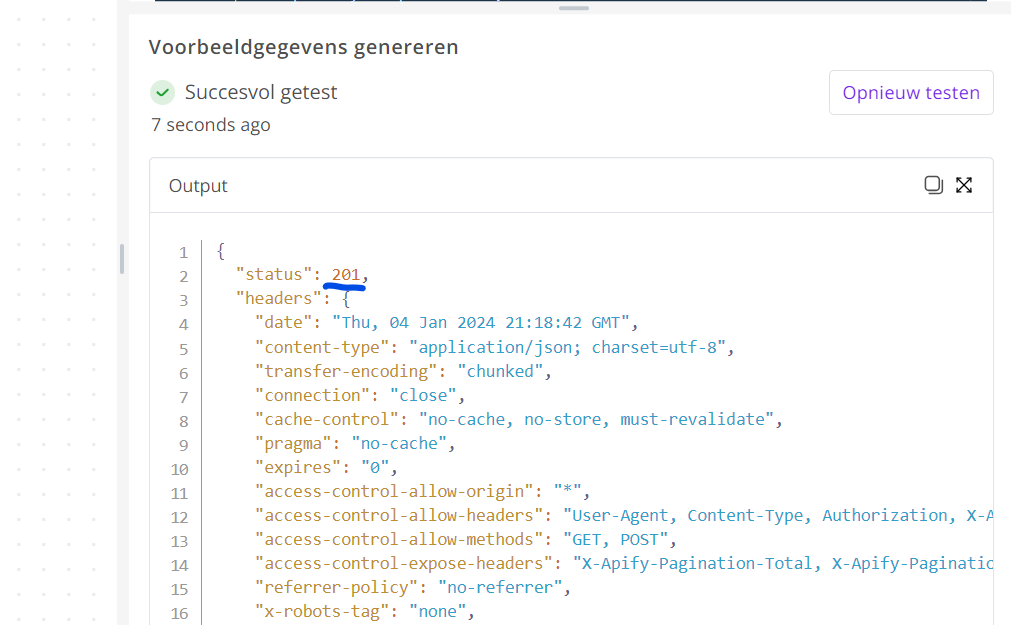

Then you can try it by testing the step.

This should be the result:

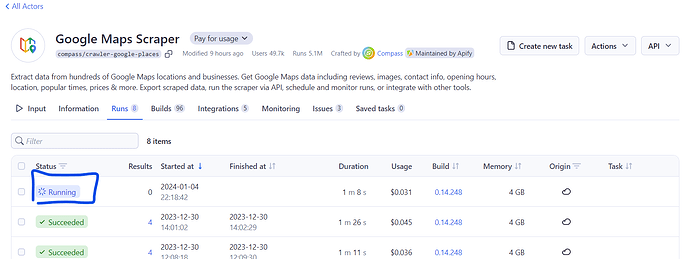
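For reference, the request that the HTTP step sends can be sketched in Python roughly as follows. This is only an illustration, assuming the cURL example points at the `/v2/acts/{actorId}/runs` endpoint with the token as a query parameter and a `Content-Type: application/json` header. `YOUR_ACTOR_ID` and `YOUR_API_TOKEN` are placeholders, the helper name is made up, and the input is a shortened version of the body above.

```python
import json
import urllib.request

APIFY_API_BASE = "https://api.apify.com/v2"


def build_run_request(actor_id: str, token: str, actor_input: dict) -> urllib.request.Request:
    """Prepare (but do not send) the POST request that starts an Actor run."""
    # Assumption: the token is passed as the "token" query parameter,
    # as in the URL copied from the API client.
    url = f"{APIFY_API_BASE}/acts/{actor_id}/runs?token={token}"
    return urllib.request.Request(
        url,
        data=json.dumps(actor_input).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Shortened version of the body shown above.
actor_input = {
    "searchStringsArray": ["restaurant"],
    "locationQuery": "New York, USA",
    "maxCrawledPlacesPerSearch": 50,
    "language": "en",
}
request = build_run_request("YOUR_ACTOR_ID", "YOUR_API_TOKEN", actor_input)
print(request.get_method(), request.full_url)

# Uncomment to actually start a run (needs a real Actor ID and token):
# with urllib.request.urlopen(request) as response:
#     print(json.load(response)["data"]["id"])
```

The request is only built here, not sent, so you can compare the URL, method, and body with what you entered in the HTTP step before spending any Apify credits.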

Then in Apify you can see the Actor running:

There are a few options for collecting the scraped data via a webhook. I assume you will run this Actor more than once; if not, it doesn’t matter, but this is the way to save more results.